背景:

业务部门获取了公司最近一个月电信客户信息(通讯信息、个人信息),想通过数据部门建模预测用户未来是否流失

数据源:teleco.csv

样本量:1000

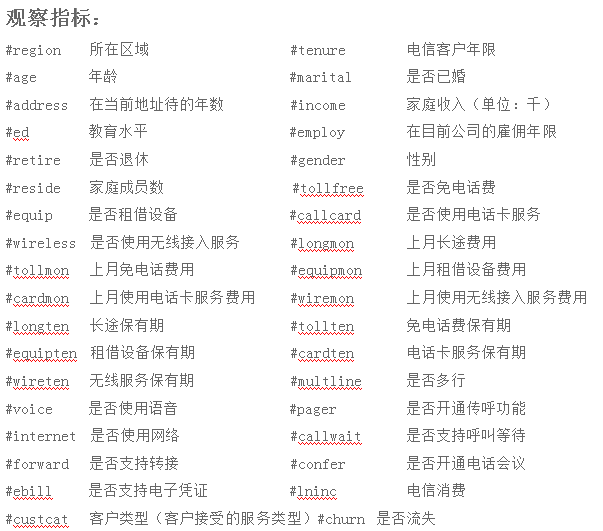

观察指标

建模方法: BP 神经网络/RBF 神经网络

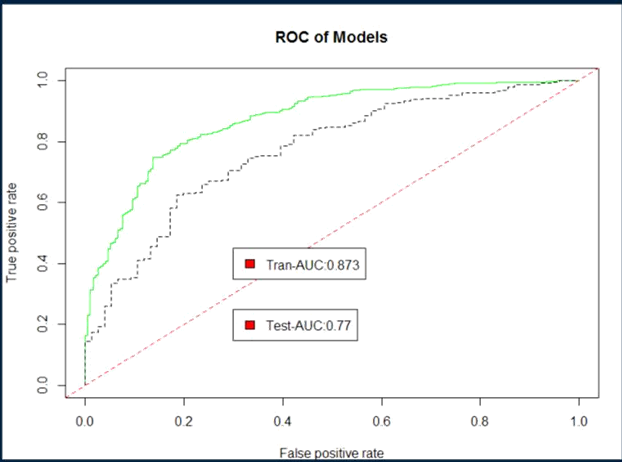

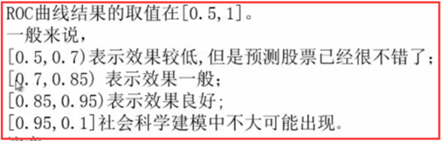

指标评估:ROC 曲线 --用来描述模型分辨能力,对角线以上的图形越高越好

建模结论

建模过程

>---------------------------------BP 神经网络建模-------------------------------

> #1.数据清洗

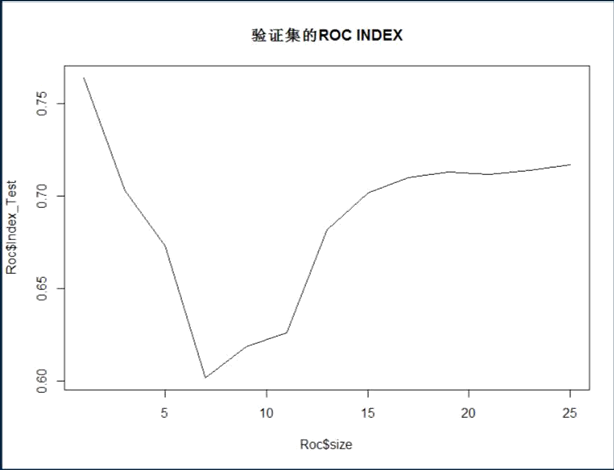

> #2.size 从 1~23 循环找到最佳 size 为 19

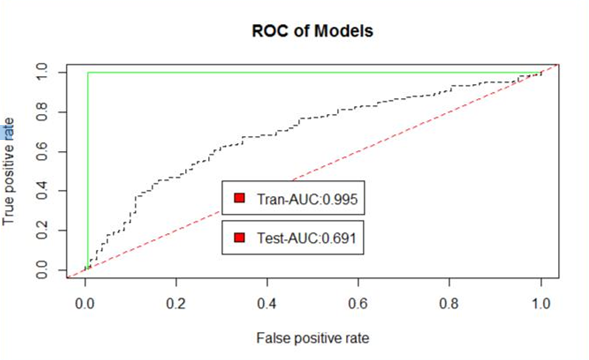

> #3.得到较为合理的模型 model_nnet #4.训练集 ROC 为 0.995,验证集 ROC 为 0.691,训练集和验证集存在过

度拟合,训练集模型效果好,验证集合模型效果一般

>

> setwd('E:R 数据挖掘实战第四周data 数据')

> library(sqldf)

> #导入数据和数据清洗

> data names(data)

[1] "region" "tenure" "age""marital" "address"

"income" "ed" "employ" "retire" "gender"

[11] "reside" "tollfree" "equip" "callcard" "wireless" "longmon" "tollmon" "equipmon" "cardmon" "wiremon" [21] "longten" "tollten" "equipten" "cardten" "wireten"

"multline" "voice" "pager" "internet" "callwait" [31] "forward" "confer" "ebill" "lninc" "custcat" "churn"

> interval_var = c('income','longten','tollten','equipten ','cardten','wireten')

> for (i in interval_var){

+ data[,i] = gsub(',','',data[,i])

+ data[,i] = as.numeric(data[,i])

+ }

> #对 Y--是否流失(分类变量)替换

> data #验证数据类型是否都为数值型

> library(dfexplore)

> dfexplore::dfplot(data)

> write.csv(data,"datanowone.csv")

> #size 从 1~22 循环,找到最佳 size 为 19

> Network<-function(maxNum,formula,sizeNum,DataSet,sample

rate){

+ library(nnet)

+ library(ROCR)

+ set.seed(100)

+ select<-sample(1:nrow(data),ceiling(nrow(data)*sample rate))

+ train=data[select,]

+ test=data[-select,]

+ st_range <- function(x) {

+ return((x - min(x)) / (max(x) - min(x)))

+ }

+ train[,1:35]<- as.data.frame(lapply(train[,1:35], st_r ange))

+ test[,1:35]<- as.data.frame(lapply(test[,1:35], st_ran ge))

+ ROC<-data.frame()

+ for (i in seq(from =1,to =sizeNum+1,by =2)){

+ model_nnet<-nnet(formula, linout = F,size = i, decay = 0.01, maxit = maxNum,trace = F,data = train)

+ train$lg_nnet_p<-predict(model_nnet, train)

+ test$lg_nnet_p<-predict(model_nnet, test)

+ pred_nnet_Tr <- prediction(train$lg_nnet_p, train$y)

+ perf_nnet_Tr <- performance(pred_nnet_Tr,"tpr","fpr")

+ pred_nnet_Te <- prediction(test$lg_nnet_p, test$y)

+ perf_nnet_Te <- performance(pred_nnet_Te,"tpr","fpr")

+ lr_m_auc_Tr<-round(as.numeric(performance(pred_nnet_ Tr,'auc')@y.values),3)

+ lr_m_auc_Te<-round(as.numeric(performance(pred_nnet_ Te,'auc')@y.values),3)

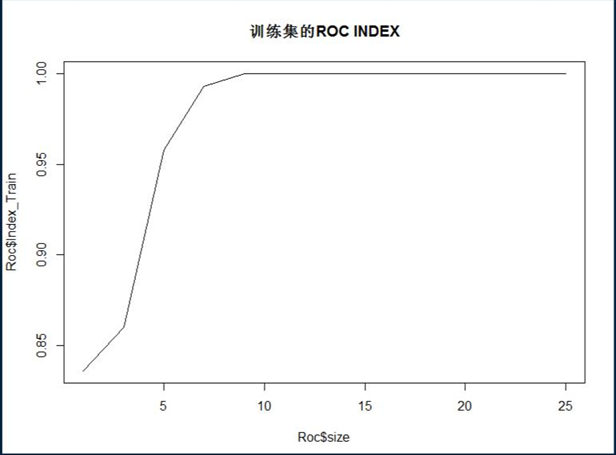

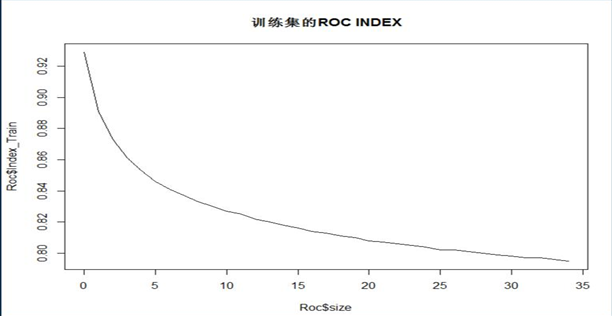

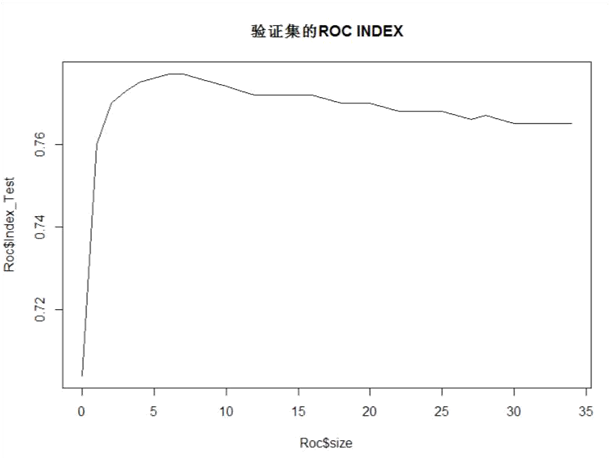

+ out plot(Roc$size,Roc$Index_Train,type="l",main="训练集的 ROC INDEX")

plot(Roc$size,Roc$Index_Test,type="l",main="验证集的 ROC INDEX")

> Proc Proc Roc.size Roc.Index_Train Roc.Index_Test 1 1 0.836 0.764 2 3 0.860 0.703 3 5 0.958 0.673 4 7 0.993 0.602 5 9 1.000 0.619 6 11 1.000 0.626 7 13 1.000 0.682 8 15 1.000 0.702 9 17 1.000 0.710 10 19 1.000 0.713 11 21 1.000 0.712 12 23 1.000 0.714 13 25 1.000 0.717 > #用循环得到的最优 size=19,建模 > data data set.seed(10) > select train=data[select,] > test=data[-select,] > #极差标准化函数 > st_range train[,1:35] test[,1:35] > library(nnet) > model_nnet pre.forest=predict(model_nnet, test) > out=pre.forest > out[out out[out>=0.5]=1 > rate2 rate2 [1] 0.6966667 > #ROC 绘图 > train$lg_nnet_p test$lg_nnet_p library(ROCR) > pred_nnet_Tr perf_nnet_Tr pred_nnet_Te perf_nnet_Te plot(perf_nnet_Tr,col='green',main="ROC of Models") > plot(perf_nnet_Te, col='black',lty=2,add=TRUE); > abline(0,1,lty=2,col='red') > lr_m_auc lr_m_str legend(0.3,0.45,c(lr_m_str),2:8) > lr_m_auc lr_m_ste legend(0.3,0.25,c(lr_m_ste),2:8)

---------------------------使用径向基神经网络建模----------------------------------------------------------

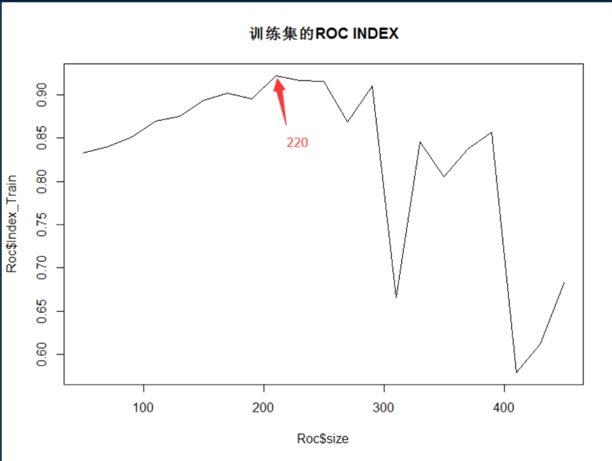

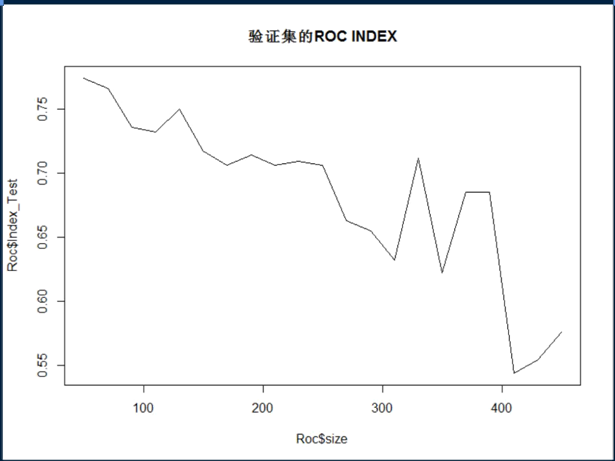

> #1.循环 1,size 从 50~450 循环(间隔 20),确定训练集对应的 ROC 最大值——对应的最佳 size 值:220

> #2.循环 2,在确定最佳 size 的基础上,P 值从 0.1~2 循环(间隔 0.1),找到训练集的 ROC 最大值——对应的 P 值:0.3

> #3.循环 3,前两次最优循环值,模型仍有过度拟合现象,惩罚项从 0 到 66 循环 66 次,找到验证集的 ROC 明显提升,训练集 ROC 影响不大的惩罚值:6

> #4.通过前 3 次的循环找到最佳模型,训练集的 ROC:0.873,验证集合的 R OC:0.77,从 ROC 的值表现来看模型效果一般

> #model #-----size 从 50~450 循环(间隔 20),寻找最佳 size 为 220-----

> Network<-function(maxNum,sizeNum,DataSet,samplerate){

+ library(nnet)

+ library(ROCR)

+ set.seed(100)

+ select<-sample(1:nrow(data),ceiling(nrow(data)*sample rate))

+ train=data[select,]

+ test=data[-select,]

+ #进行极差标准化

+ st_range <- function(x) {

+ return((x - min(x)) / (max(x) - min(x)))

+ }

+

+ train[,1:35]<- as.data.frame(lapply(train[,1:35], st_r ange))

+ test[,1:35]<- as.data.frame(lapply(test[,1:35], st_ran ge))

+ x<-train[,1:35]

+ y<-train[,36]

+ ROC<-data.frame()

+ for (i in seq(from =50,to =sizeNum+1,by =20)){

+ model <- rbf(x, y, size=i, maxit=maxNum,linOut=F,init Func = "RBF_Weights",initFuncParams=c(-4, 4, 0, 0.01, 0) , learnFuncParams=c(1e-8, 0, 1e-8, 0.1, 0.8))

+ train$lg_nnet_p<-predict(model,train[,1:35])

+ test$lg_nnet_p<-predict(model, test[,1:35])

+ pred_nnet_Tr <- prediction(train$lg_nnet_p, train$y)

+ perf_nnet_Tr <- performance(pred_nnet_Tr,"tpr","fpr

")

+ pred_nnet_Te <- prediction(test$lg_nnet_p, test$y)

+ perf_nnet_Te <- performance(pred_nnet_Te,"tpr","fpr

")

+ lr_m_auc_Tr<-round(as.numeric(performance(pred_nnet_ Tr,'auc')@y.values),3)

+ lr_m_auc_Te<-round(as.numeric(performance(pred_nnet_ Te,'auc')@y.values),3)

+ out plot(Roc$size,Roc$Index_Test,type="l",main="验证集的 ROC INDEX")

> #-P 值从 0.1~2 循环(间隔 0.1),找到训练集的 ROC 最大对应的 P 值为0.3

> Network<-function(maxNum,sizeNum,DataSet,samplerate){

+ library(nnet)

+ library(ROCR)

+ set.seed(100)

+ select<-sample(1:nrow(data),ceiling(nrow(data)*sample rate))

+ train=data[select,]

+ test=data[-select,]

+ st_range <- function(x) {

+ return((x - min(x)) / (max(x) - min(x)))

+ }

+

+ train[,1:35]<- as.data.frame(lapply(train[,1:35], st_r ange))

+ test[,1:35]<- as.data.frame(lapply(test[,1:35], st_ran ge))

+ x<-train[,1:35]

+ y<-train[,36]

+ ROC<-data.frame()

+ for (i in seq(from =0.1,to =sizeNum+1,by =0.1)){

+ model <- rbf(x, y, size=220, maxit=maxNum,linOut=F,in itFunc = "RBF_Weights",initFuncParams=c(-4, 4, 0, i, 0) ,l earnFuncParams=c(1e-8, 0, 1e-8, 0.1, 0.8))

+ train$lg_nnet_p<-predict(model,train[,1:35])

+ test$lg_nnet_p<-predict(model, test[,1:35])

+ pred_nnet_Tr <- prediction(train$lg_nnet_p, train$y)

+ perf_nnet_Tr <- performance(pred_nnet_Tr,"tpr","fpr

")

+ pred_nnet_Te <- prediction(test$lg_nnet_p, test$y)

+ perf_nnet_Te <- performance(pred_nnet_Te,"tpr","fpr

")

+ lr_m_auc_Tr<-round(as.numeric(performance(pred_nnet_ Tr,'auc')@y.values),3)

+ lr_m_auc_Te<-round(as.numeric(performance(pred_nnet_ Te,'auc')@y.values),3)

+ out plot(Roc$size,Roc$Index_Test,type="l",main="验证集的 ROC INDEX")

> Proc Proc #惩罚值=2

Roc.size Roc.Index_Train Roc.Index_Test

1 0 0.929 0.704

2 1 0.891 0.760

3 2 0.873 0.770

4 3 0.861 0.773

5 4 0.853 0.775

6 5 0.846 0.776

7 6 0.841 0.777

8 7 0.837 0.777

9 8 0.833 0.776

10 9 0.830 0.775

11 10 0.827 0.774

12 11 0.825 0.773

29 28 0.800 0.767

30 29 0.799 0.766

31 30 0.798 0.765

32 31 0.797 0.765

33 32 0.797 0.765

34 33 0.796 0.765

35 34 0.795 0.765

> #------将三次循环的结果得到的最佳 size,P 值,惩罚项,得出较为合理的径向基神经网络模型---------

> setwd('E:R 数据挖掘实战第四周data 数据')

> data data dfexplore::dfplot(data)

> #随机抽样,建立训练集与测试集

> set.seed(100)

> select train=data[select,]

> test=data[-select,]

> library("RSNNS")

> st_range train[,1:35] test[,1:35] x y model plotIterativeError(model)

> train$lg_nnet_p test$lg_nnet_p library(ROCR)

> pred_nnet_Tr perf_nnet_Tr pred_nnet_Te perf_nnet_Te plot(perf_nnet_Tr,col='green',main="ROC of Models")

> plot(perf_nnet_Te, col='black',lty=2,add=TRUE);

> abline(0,1,lty=2,col='red')

> lr_m_auc lr_m_str legend(0.3,0.45,c(lr_m_str),2:8)

> lr_m_auc lr_m_ste legend(0.3,0.25,c(lr_m_ste),2:8)